Will Qualcomm’s New AI Inference Solutions Boost Growth Prospects?

Qualcomm Incorporated (QCOM) has taken another big step into the world of artificial intelligence with the launch of its new AI200 and AI250 chip-based accelerator cards and racks. These new AI inference solutions are designed to enhance the performance and efficiency of AI workloads in data centers, marking a key move in Qualcomm’s growth strategy.

Powering the Next Era of AI Inference

The AI200 and AI250 solutions are powered by Qualcomm’s in-house Neural Processing Unit (NPU) technology. This innovation helps accelerate AI inference — the process of using trained AI models to make real-time decisions or predictions.

The AI200 is a rack-level inference solution aimed at large language models and multimodal AI workloads. The AI250 adds a near-memory computing architecture that, according to Qualcomm, delivers more than 10x higher effective memory bandwidth at lower power consumption. Both solutions support confidential computing for data protection and direct liquid cooling for thermal efficiency.

Why AI Inference Is the Future

The global AI landscape is changing. Instead of focusing only on training massive AI models, companies are now emphasizing AI inference — deploying those models efficiently in real-world applications.

According to Grand View Research, the AI inference market was estimated at $97.24 billion in 2024 and is projected to grow at a 17.5% CAGR from 2025 to 2030. Qualcomm aims to seize this opportunity by expanding its AI portfolio to meet growing demand for scalable, affordable, and energy-efficient inference solutions.
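To get a feel for what that growth rate implies, the quoted figures can be compounded forward. This is a rough sketch only: treating 2024 as the base year and the helper name `project_market_size` are assumptions made for illustration, not Grand View Research's methodology.

```python
def project_market_size(base_value: float, cagr: float, years: int) -> float:
    """Compound base_value forward by `years` periods at an annual rate `cagr`."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return base_value * (1.0 + cagr) ** years


# Figures quoted in the article: $97.24 billion in 2024, 17.5% CAGR for 2025-2030.
# Assumption: 2024 is the base year, so 2030 is six compounding periods away.
BASE_2024_BILLION = 97.24
CAGR = 0.175

for year in range(2025, 2031):
    size = project_market_size(BASE_2024_BILLION, CAGR, year - 2024)
    print(f"{year}: ~${size:.1f}B")
```

Under that assumption the 2030 value comes out near $256 billion. A published forecast may differ if it compounds from a different base year.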

Market Adoption and Early Success

Qualcomm’s new chips have already secured an early customer. HUMAIN, a Saudi Arabia-based AI company, has selected the AI200 and AI250 to power high-performance inference services in Saudi Arabia and other regions. This early traction suggests Qualcomm’s products can help data centers meet performance and security needs while keeping operational costs lower.

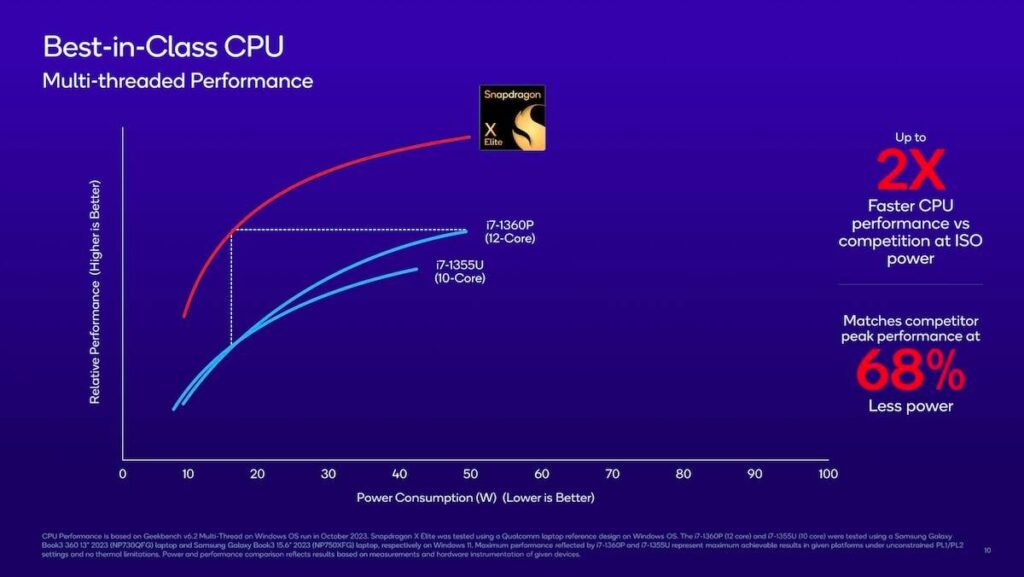

How Qualcomm Stacks Up Against Competitors

Qualcomm is entering a space dominated by giants like NVIDIA, Intel, and AMD, each with its own AI inference hardware. Here’s a quick comparison:

| Company | Key AI Product | Performance Highlights | Market Position |
|---|---|---|---|
| Qualcomm (QCOM) | AI200 & AI250 | 10x memory bandwidth, lower power use, confidential computing | Emerging challenger |
| NVIDIA (NVDA) | Blackwell, H200, L40S | High speed and efficiency across cloud and data centers | Market leader |
| Intel (INTC) | Crescent Island GPU | Optimized for inference, MLPerf v5.1 benchmark certified | Strong contender |
| AMD (AMD) | Instinct MI350 | Power-efficient cores, strong in generative AI | Rapidly growing rival |

While NVIDIA remains the dominant force, Qualcomm’s focus on energy efficiency, scalability, and affordability could help it carve a distinct position in this competitive market.

Stock Performance and Outlook

Despite these product launches, Qualcomm’s stock (QCOM) has gained 9.3% over the past year, well behind the industry’s 62% growth. The company’s price-to-earnings (P/E) ratio of 15.73 is well below the industry average of 37.93, which suggests the stock may be undervalued relative to its peers.
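The size of that valuation gap is easy to quantify. The sketch below uses only the two P/E figures quoted above, and the helper name `relative_discount` is hypothetical. A lower multiple on its own hints at undervaluation but does not establish it.

```python
def relative_discount(value: float, benchmark: float) -> float:
    """Return how far `value` sits below `benchmark`, as a fraction of the benchmark."""
    if benchmark <= 0:
        raise ValueError("benchmark must be positive")
    return 1.0 - value / benchmark


# P/E figures quoted in the article.
QCOM_PE = 15.73
INDUSTRY_PE = 37.93

discount = relative_discount(QCOM_PE, INDUSTRY_PE)
print(f"QCOM trades at a {discount:.1%} discount to the industry P/E")
```

On these numbers, Qualcomm's multiple is a little under 60% below the industry average.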

Earnings estimates for 2025 have held steady, while estimates for 2026 have been revised up slightly, by 0.25%, pointing to stable analyst sentiment.

Final Thoughts

Qualcomm’s new AI inference solutions show strong potential to reshape the future of AI data centers. With high performance, cost efficiency, and advanced security, the AI200 and AI250 could play a vital role in expanding Qualcomm’s presence in the global AI market.

However, with fierce competition from NVIDIA, Intel, and AMD, Qualcomm will need consistent innovation and strategic partnerships to sustain its momentum. Still, the company’s latest move marks a promising start toward driving future growth in the AI inference era.